Bachelor's project carried out with Dr. O.P. Verma during the final year of my studies at Delhi Technological University, together with three classmates. Published at ICRAI, 2017.

Motivation

In this project we explored the viability of a camera-based approach to navigation that does not rely on expensive hardware.

The motivation was twofold:

- Humans have no specialized hardware like LIDAR for sensing the environment; we get by with our eyes. By the same logic, the robots we design should be able to do so too.

- Compared to cameras, LIDAR is expensive both to manufacture and to operate.

Approach

Our basic idea was to use a CNN (Convolutional Neural Network) to extract information from the scene and feed its output to a path planner. Within the project's scope, we decided to have the CNN generate a depth map of the scene and use that for path planning.

Testing our approach on actual vehicles was not viable for financial and safety reasons. The real-world datasets available were not large enough to train a deep neural network, and creating one would have been too expensive.

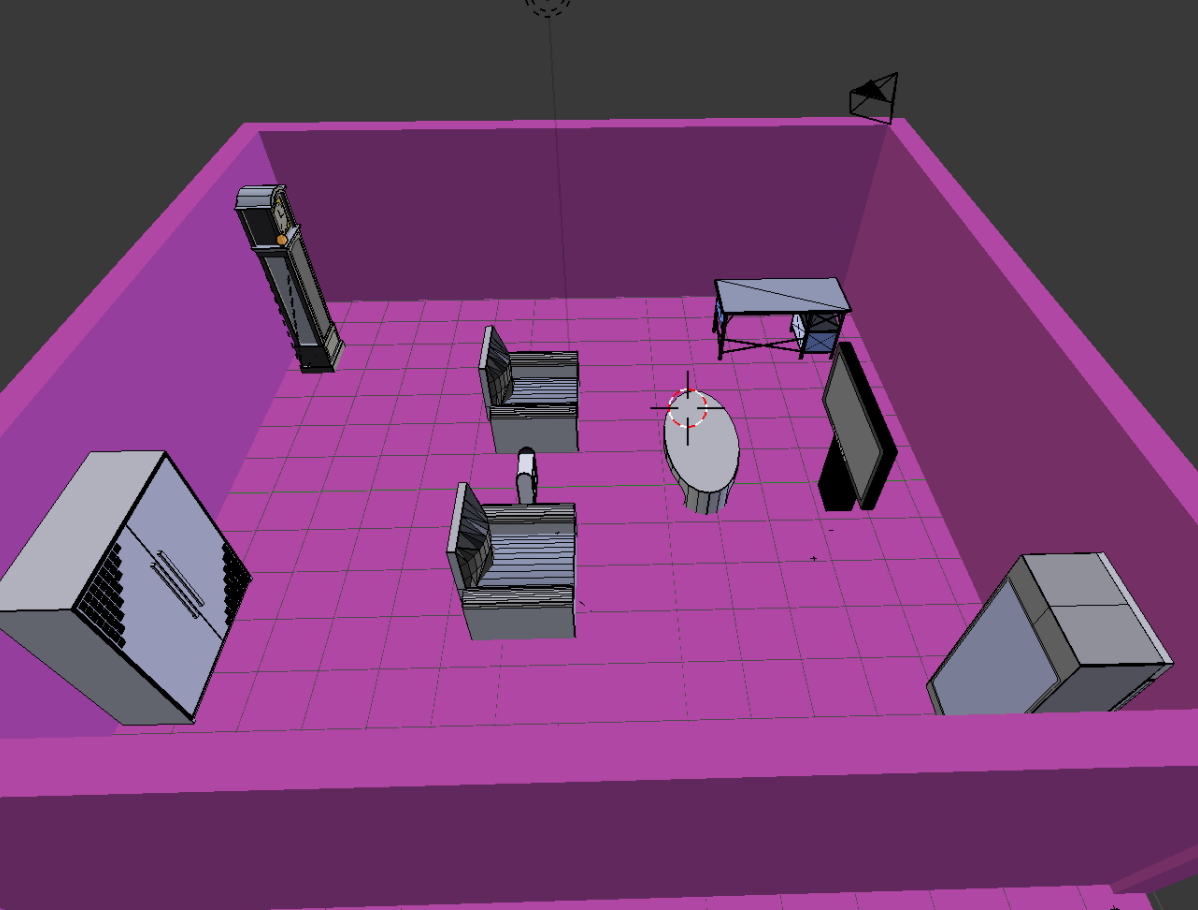

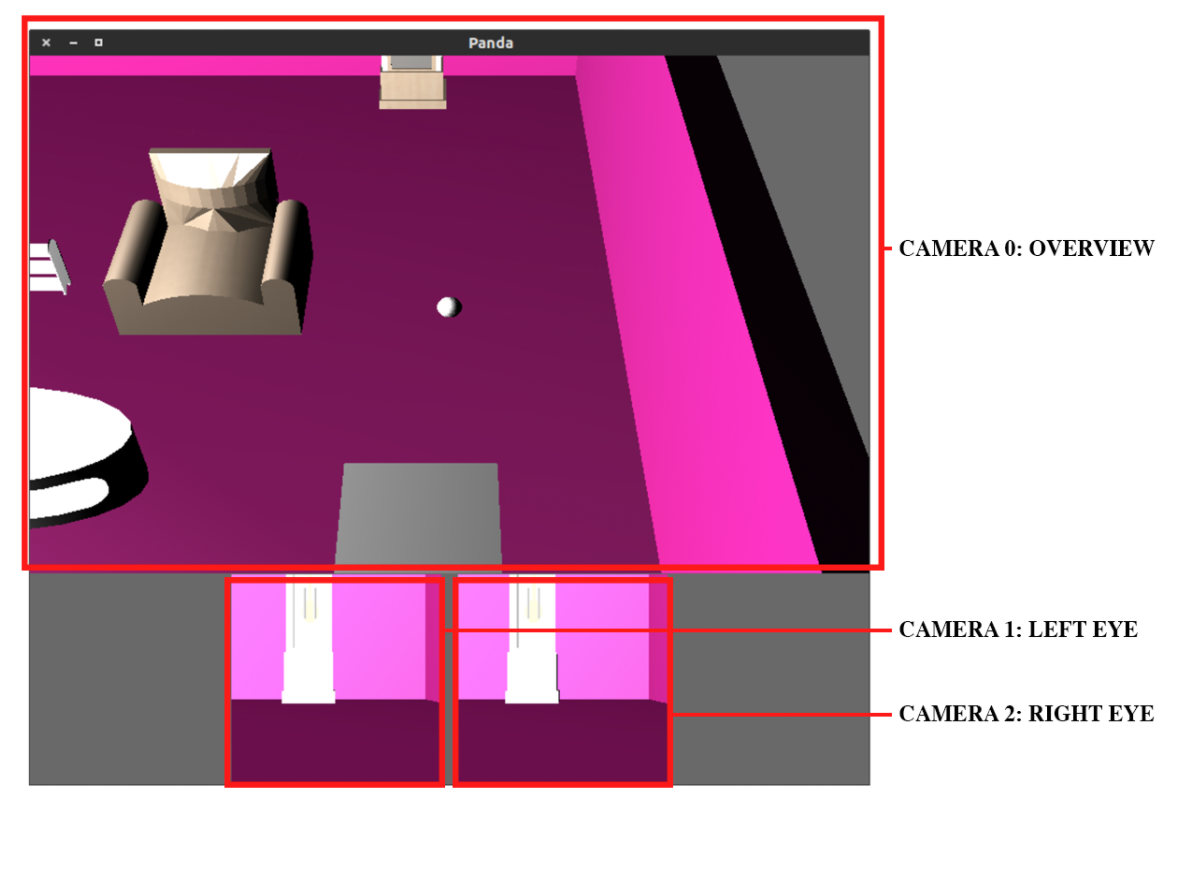

Due to these constraints, we implemented our autonomous agent in a simulation built with Panda3D. We used ShapeNet (a 3D object database) for the obstacles in the environment.

Scene Depth Estimation

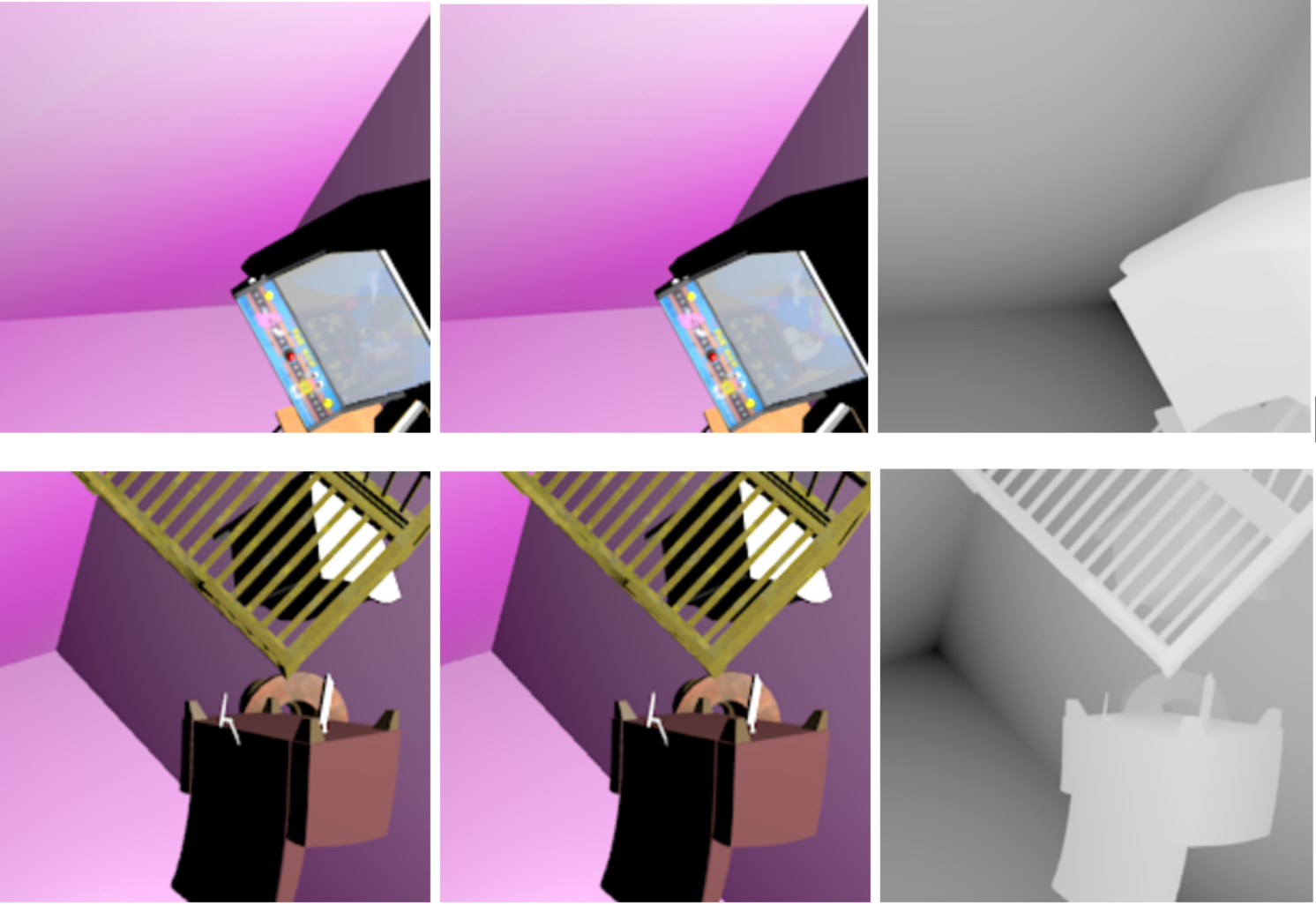

We used ShapeNet to synthetically generate a dataset for scene depth estimation. We chose stereo image input over monocular input so that more information about scene depth reaches the network. (See: Stereopsis)

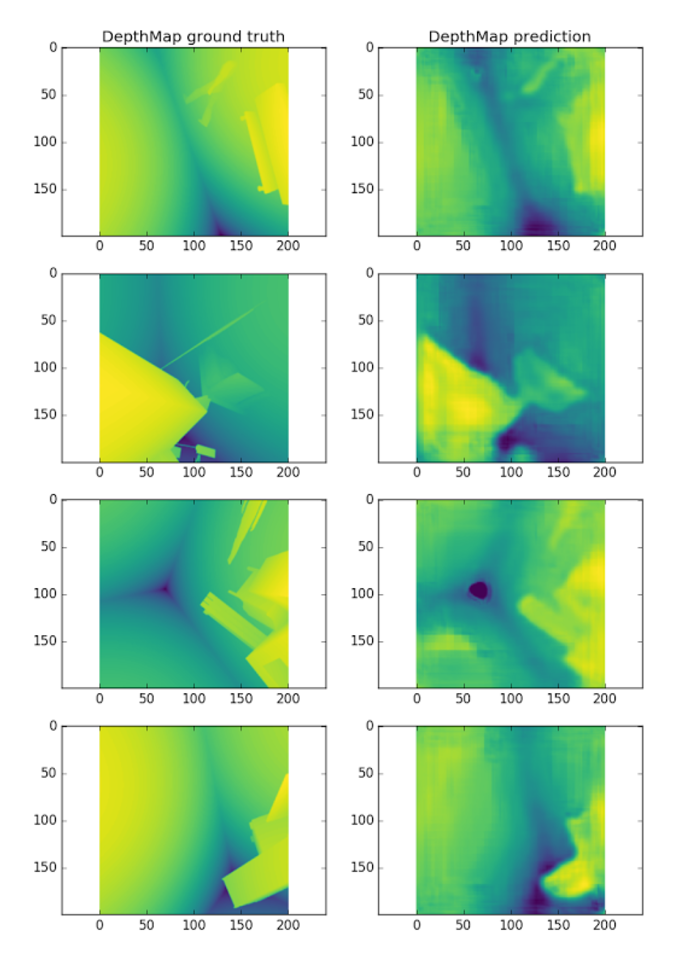

Armed with our cheaply generated dataset, we trained a CNN that, given stereo input, produces a depth map of the scene in view.

Path Planning

The path planner discretized the environment into 1x1 blocks and used the A* algorithm to search for a path to the destination block. The agent had two allowed moves: move forward by one block, and rotate by 45 degrees.
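To make the search concrete, here is a minimal sketch of A* over (x, y, heading) states with the two moves described above. It is not the project's actual code: the grid encoding, the unit cost per action, the function name `astar`, and the Chebyshev heuristic are all assumptions chosen for illustration.

```python
import heapq

# Eight headings at 45-degree steps, as (dx, dy) grid offsets (assumed encoding).
HEADINGS = [(0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1)]


def chebyshev(a, b):
    """Number of 8-connected steps between two blocks, ignoring obstacles."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def astar(grid, start, start_heading, goal, heuristic=chebyshev):
    """Search for a sequence of actions from start to goal.

    grid[y][x] == 1 marks a blocked block. Every action (a 45-degree turn
    or a one-block forward move) is assumed to cost 1. Returns a list of
    action names, or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    start_state = (start[0], start[1], start_heading)
    frontier = [(heuristic(start, goal), 0, start_state)]
    best_cost = {start_state: 0}
    parent = {start_state: None}

    while frontier:
        _, cost, state = heapq.heappop(frontier)
        if cost > best_cost[state]:
            continue  # stale queue entry, a cheaper route was found already
        x, y, h = state
        if (x, y) == goal:
            # Walk the parent links back to the start to recover the actions.
            actions = []
            while parent[state] is not None:
                state, action = parent[state]
                actions.append(action)
            return actions[::-1]

        dx, dy = HEADINGS[h]
        candidates = [
            ("left", (x, y, (h - 1) % 8)),
            ("right", (x, y, (h + 1) % 8)),
            ("forward", (x + dx, y + dy, h)),
        ]
        for action, nxt in candidates:
            nx, ny, _ = nxt
            if not (0 <= nx < cols and 0 <= ny < rows) or grid[ny][nx]:
                continue  # off the map or blocked
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                parent[nxt] = (state, action)
                priority = new_cost + heuristic((nx, ny), goal)
                heapq.heappush(frontier, (priority, new_cost, nxt))
    return None
```

Including the heading in the state is what lets the planner account for rotations: two visits to the same block with different headings are treated as different states, because the set of blocks reachable by the next forward move differs.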

For short-term path planning (moving to the next block), we ran the CNN on the in-game camera input for obstacle detection. If the depth of the scene in front of the agent was less than a certain threshold, the path was deemed unsafe.
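The threshold check can be sketched roughly as follows. This is an illustration under assumptions, not the project's implementation: the depth map is taken to be a 2D list of distances, "in front of the agent" is approximated by a central window of the image, and the names `path_is_safe` and `window` are hypothetical.

```python
def path_is_safe(depth_map, threshold, window=0.5):
    """Return True if nothing in the central window is closer than threshold.

    depth_map: 2D list of per-pixel depth values (assumed format).
    threshold: minimum depth considered safe (the actual value is not
               given in this write-up).
    window:    fraction of the image height and width treated as
               "in front of the agent" (an assumption).
    """
    rows, cols = len(depth_map), len(depth_map[0])
    half_r = max(1, int(rows * window / 2))
    half_c = max(1, int(cols * window / 2))
    r0, r1 = max(0, rows // 2 - half_r), min(rows, rows // 2 + half_r)
    c0, c1 = max(0, cols // 2 - half_c), min(cols, cols // 2 + half_c)

    # The nearest point in the window decides whether the move is allowed.
    nearest = min(depth_map[r][c] for r in range(r0, r1) for c in range(c0, c1))
    return nearest >= threshold
```

Using the minimum depth is the most conservative choice; a percentile or mean over the window would be more tolerant of noisy pixels in the predicted depth map.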

We evaluated our agent with different A* heuristics for pathfinding and compared it against a baseline of human performance on the same task. For the human runs, the overview camera was hidden; players were given a direction prompt pointing to the destination block, as well as a marker on the destination.
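This write-up does not list the heuristics that were compared. As an illustration only, these are three common grid heuristics one might try; the function names are hypothetical.

```python
import math


def manhattan(a, b):
    """Sum of the axis distances; assumes movement along the axes only."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def euclidean(a, b):
    """Straight-line distance between block centres."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def chebyshev(a, b):
    """Step count when a diagonal step costs the same as a straight one."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))
```

If a diagonal forward move is assumed to cost the same as a straight one, only the Chebyshev distance never overestimates the remaining cost. Manhattan and Euclidean distances can overestimate in that setting, which may speed up the search at the price of paths that are not guaranteed to be the shortest.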

Conclusion & Next Steps

We demonstrated that navigation without specialized hardware could be possible, with applications in small robots and vehicles. However, using heuristics in real-world scenarios would not scale well. Integrating the CNN with a SLAM algorithm would therefore be the next step toward testing this approach on actual vehicles.

Our CNN did not understand higher-level constructs like objects; it was trained only on the low-level task of estimating per-pixel depth. Training the network end to end for the navigation task, without a path planning algorithm, is another future direction for this research.

Our paper on this project was accepted at the International Conference on Robotics and Artificial Intelligence and published in December 2017. Check out our code here.